A/B testing has become a critical tool for B2B and B2C marketers who want to optimize the performance of their email marketing campaigns. Using a software tool, a marketer tests two variations of an email, popup or landing page to see which one drives higher engagement with their audience. Once a winner is determined, you can set up the system to send the winning version to the rest of your subscribers or to display it on your web page. This can be done manually or through automation.

According to a survey of client-side marketers conducted by Econsultancy, 50% of respondents planned to increase their investment in A/B and multivariate testing in 2015. It’s no surprise that so many companies are jumping on board: A/B testing is a reliable, data-driven way to take some of the guesswork out of optimizing your campaigns.

Even if you’ve been using A/B testing for some time, it’s always a good idea to review best practices so you keep improving the performance of your email marketing campaigns and stay on top of trends.

Unfortunately, many marketers still run random tests without clear goals or direction. They don’t think about the ultimate purpose of the content before executing, and in the end they see no improvement. Answer each of the questions below realistically, before you begin and again once results come in, and you’ll start off on the right track toward a more successful campaign.

To get started, ask the following questions:

- What is the goal? For example: Is the goal to gain more signups and boost conversions to my website or to increase revenue?

- How am I going to measure results after implementing A/B testing?

- Did the actual outcomes match my expected goals?

- What should I change or improve after this test?

- Were my assumptions correct? Will my objectives be met? Did I set realistic goals?

After determining your main goals, the next step is deciding which elements to test in order to increase your conversions. A conversion happens when a recipient completes your goal, so your number of conversions is the number of people who do so.

After carefully analyzing your overall performance and determining which metric you would like to improve, you can start setting up an A/B test for your email campaign.

Below, we go through 5 simple steps for setting up a test using marketing automation. We’ll also cover how to measure results and improve performance over time.

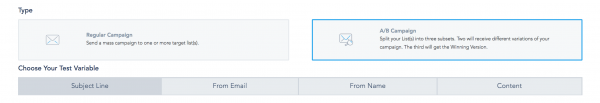

1. Define the element

You can test different elements for an email, including:

- Subject line

- Sender email address

- Sender’s name

- Email content

Deciding which element to test first takes some research into which elements affect which metrics, such as open rate and click-through rate. For instance, the subject line and email sender have a huge impact on your open rate, while the CTA has a significant impact on your click-through rate. Each of these elements, however, mainly moves one of those metrics rather than both.

Here is how this worked when we set up an A/B test internally at VBOUT. We were sending out a lot of email campaigns and noticed that our open rates were low; however, the click-through rate among the emails that were opened was very high. This told us that we needed to test elements that increase the open rate, rather than adjust the CTA or the content of the email body itself.
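
The diagnosis above can be written as a simple rule of thumb. The sketch below is a hypothetical helper, not a VBOUT feature, and the default benchmark values are placeholder assumptions you would replace with your own historical averages. "Click-to-open rate" here means clicks divided by opens, the figure that was high in our case.

```python
def suggest_test_focus(open_rate: float, click_to_open_rate: float,
                       open_benchmark: float = 0.20,
                       click_to_open_benchmark: float = 0.10) -> str:
    """Suggest which email elements to A/B test first.

    Rates are fractions (0.12 means 12%). The default benchmarks are
    hypothetical placeholders; replace them with your own averages.
    """
    low_opens = open_rate < open_benchmark
    low_clicks = click_to_open_rate < click_to_open_benchmark
    if low_opens and not low_clicks:
        # Openers engage well, so the bottleneck is getting the email opened.
        return "subject line / sender"
    if low_clicks and not low_opens:
        # The email gets opened, but the body does not drive clicks.
        return "content / CTA"
    if low_opens and low_clicks:
        # Assumption: fix opens first, since more openers make content tests more reliable.
        return "subject line / sender first, then content / CTA"
    return "no clear priority: both metrics meet the benchmarks"


# Low open rate, high click-to-open rate: the situation described above.
print(suggest_test_focus(open_rate=0.12, click_to_open_rate=0.25))
# subject line / sender
```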

Here are a few tips for making your subject lines more compelling:

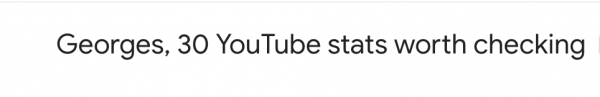

1.1 Personalize your subject line

According to research by Experian Marketing Services, adding the recipient’s name to the subject line increased the open rate by 29.3%. When recipients see their name in the subject line, the email feels more personal and less like spam. People also naturally respond to seeing their own name, so they are more likely to open and read the email, and to feel that it is tailored to their needs and that they have a personal relationship with the sender. Beyond open rates, personalization helps increase customer retention as well.
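
Most email platforms insert the name through their own merge tags. As a platform-neutral illustration, the sketch below fills a hypothetical `{first_name}` placeholder and falls back to a generic greeting when a subscriber’s name is missing, so nobody receives a subject line that starts with "Hi , ...". The function name and fallback word are assumptions.

```python
from typing import Optional


def personalize_subject(template: str, first_name: Optional[str],
                        fallback: str = "there") -> str:
    """Fill the {first_name} placeholder, using a fallback when the name is missing."""
    name = (first_name or "").strip()  # treat None and blank strings the same way
    return template.format(first_name=name if name else fallback)


template = "Hi {first_name}, your marketing report is ready"
print(personalize_subject(template, "Dana"))  # Hi Dana, your marketing report is ready
print(personalize_subject(template, None))    # Hi there, your marketing report is ready
```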

1.2 Add emojis to your subject line

According to a report by Experian, brands that added emojis to their subject lines saw a 45% boost in unique open rates. People love emojis, and when used properly, emojis and images can convey an emotion or idea faster and better than text alone. Emojis also work well on mobile devices. People can’t or won’t read much text on a phone, so it’s best to keep subject lines to 30 to 40 characters. An emoji saves space while still conveying just as much when used properly.

Here’s an example of a clock emoji showing users they only have a short period of time before the webinar begins. This drives urgency and entices email subscribers to open the email.

Be mindful when using this tactic, as its success depends on your target demographic. Millennials, for instance, respond much better to emojis than people over 45.
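
To check a subject line against the 30 to 40 character guideline, a simple length check is enough. This is a hypothetical helper; note that Python’s `len()` counts Unicode code points, so a simple emoji such as the alarm clock (U+23F0) counts as one character, while emoji built from several code points count as more. Treat the result as an approximation of what readers see.

```python
from typing import Tuple

MAX_MOBILE_SUBJECT_CHARS = 40  # upper end of the 30 to 40 character guideline


def check_subject_length(subject: str,
                         limit: int = MAX_MOBILE_SUBJECT_CHARS) -> Tuple[int, bool]:
    """Return (length in code points, whether it fits within the limit)."""
    length = len(subject)
    return length, length <= limit


with_emoji = "\u23f0 Webinar starts in 1 hour"
without_emoji = "Hurry: our webinar starts in just one hour"
print(check_subject_length(with_emoji))     # (26, True)
print(check_subject_length(without_emoji))  # (42, False)
```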

2. Determine how to split test your campaign

Once you have chosen the two versions to test, you need to split your list into three groups. The percentages below show how many subscribers receive each test version and how many receive the winning version.

When testing subject lines, we usually split it up in this way:

- Subject line A to be sent to 15% of your subscribers.

- Subject line B to be delivered to another 15%.

- The winning version (A or B) to be sent to the remaining 70% of your subscriber list.
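
Your email platform normally handles this split for you. To show what it involves, here is a minimal sketch with a hypothetical function name: subscribers are shuffled so that each group is a random sample, then cut into 15% / 15% / 70% slices. The fixed seed is an assumption that simply makes the split reproducible.

```python
import random
from typing import Dict, List, Sequence


def split_for_ab_test(subscribers: Sequence[str], test_share: float = 0.15,
                      seed: int = 42) -> Dict[str, List[str]]:
    """Randomly split a list into test group A, test group B and the winner group."""
    if not 0 < test_share <= 0.5:
        raise ValueError("test_share must be in (0, 0.5]")
    shuffled = list(subscribers)
    random.Random(seed).shuffle(shuffled)  # random assignment avoids biased groups
    n_test = round(len(shuffled) * test_share)
    return {
        "A": shuffled[:n_test],
        "B": shuffled[n_test:2 * n_test],
        "winner": shuffled[2 * n_test:],  # receives whichever version wins
    }


groups = split_for_ab_test([f"user{i}@example.com" for i in range(1000)])
print({name: len(members) for name, members in groups.items()})
# {'A': 150, 'B': 150, 'winner': 700}
```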

These numbers are not arbitrary; they are based on a few principles you’ll be familiar with if you’ve studied statistics. You want your results to be statistically significant, and for that your sample size must be large enough. What counts is the absolute number of subscribers in each test group, not the percentage: 15% of a 10,000-subscriber list is 1,500 recipients per version, while 15% of a 1,000-subscriber list is only 150. Choose your percentages with this in mind, and tweak them over time to find the optimal setup for your subscribers.
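
A common way to check whether a difference in open rates is statistically significant is the two-proportion z-test. The sketch below is our own illustration, not a feature of any particular platform, and the numbers are hypothetical. It relies on the normal approximation, which assumes each group has a reasonable number of opens and non-opens. It shows why absolute group size matters: the same 22% vs 18% result is significant with 1,500 recipients per version but not with 150.

```python
from math import erf, sqrt
from typing import Tuple


def two_proportion_z_test(opens_a: int, sent_a: int,
                          opens_b: int, sent_b: int) -> Tuple[float, float]:
    """Two-sided z-test for the difference between two open rates.

    Returns (z, p_value). Under the null hypothesis both versions share the
    same true open rate, so the standard error uses the pooled rate.
    """
    p_a, p_b = opens_a / sent_a, opens_b / sent_b
    pooled = (opens_a + opens_b) / (sent_a + sent_b)
    se = sqrt(pooled * (1 - pooled) * (1 / sent_a + 1 / sent_b))
    if se == 0:
        raise ValueError("open rates of 0% or 100% in both groups cannot be tested")
    z = (p_a - p_b) / se
    # Standard normal CDF: Phi(x) = (1 + erf(x / sqrt(2))) / 2
    p_value = 2 * (1 - 0.5 * (1 + erf(abs(z) / sqrt(2))))
    return z, p_value


# Same open rates (22% vs 18%), different group sizes (hypothetical numbers).
print(two_proportion_z_test(330, 1500, 270, 1500))  # p well below 0.05
print(two_proportion_z_test(33, 150, 27, 150))      # p far above 0.05
```

A p-value below 0.05 is the conventional, though somewhat arbitrary, threshold for calling a result significant.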

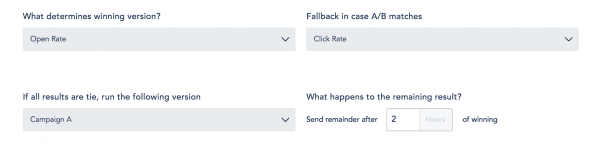

3. Choose your Key Performance Indicators (KPIs)

If your chosen element is the subject line, the metric that defines the winning version is the open rate. What if A and B have the same open rate? You have three options: use a secondary metric to decide the winner, choose the winner yourself, or run another test. A good secondary metric is the click-through rate (CTR), which shows whether subscribers clicked through from the email. You could also use whether the email was fully read, or whether subscribers completed the goal and converted, for example by signing up on your landing page, making a purchase, or downloading an eBook. If your secondary metric also ends in a tie, you will need to choose the winner yourself.
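
The tie-breaking order can be expressed in a few lines. This is a hypothetical helper: it compares the primary metric (open rate) first, falls back to the secondary metric (here CTR), and returns `None` when both tie, meaning you pick the winner yourself or run another test. The `tolerance` parameter is our own assumption, letting you treat very small differences as ties.

```python
from typing import Dict, Optional


def pick_winner(primary: Dict[str, float], secondary: Dict[str, float],
                tolerance: float = 0.0) -> Optional[str]:
    """Return "A" or "B", or None if both metrics tie.

    Differences no larger than `tolerance` count as a tie.
    """
    for metric in (primary, secondary):  # open rate first, then the tie-breaker
        diff = metric["A"] - metric["B"]
        if abs(diff) > tolerance:
            return "A" if diff > 0 else "B"
    return None


open_rates = {"A": 0.21, "B": 0.21}
click_through_rates = {"A": 0.031, "B": 0.047}
print(pick_winner(open_rates, click_through_rates))  # B
```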

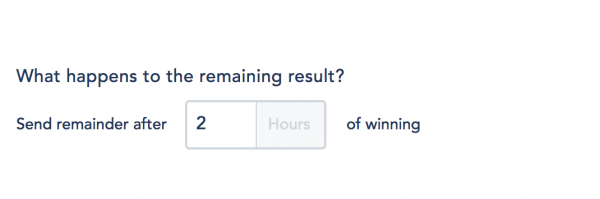

4. Select the timing for sending the winning variation

The final setup step is to decide when the test should conclude and when the remaining subscribers should receive the winning version. When the test ends, the results are reviewed and the version with the highest open rate is declared the winner. For example, you can send the winning variation 2 hours after the results are computed.
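
In an automated setup, the winner’s send time follows from three settings: when the test starts, how long it runs, and the delay after results are computed. The helper below is hypothetical, and the start date and 4-hour test window are assumed examples; only the 2-hour delay comes from the text above.

```python
from datetime import datetime, timedelta


def winner_send_time(test_start: datetime, test_duration: timedelta,
                     delay_after_results: timedelta = timedelta(hours=2)) -> datetime:
    """When the remaining subscribers should receive the winning version."""
    results_computed = test_start + test_duration  # the test window closes here
    return results_computed + delay_after_results


start = datetime(2016, 3, 1, 9, 0)
print(winner_send_time(start, timedelta(hours=4)))
# 2016-03-01 15:00:00
```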

Tips for A/B testing the email content

When you A/B test the email content, your KPIs are different. In this case, the open rate matters only because it determines your sample size: only people who open the email see the content. If just 5 people opened the email, your content test ran on those 5 people, which is far too small a sample. You may need to rethink the percentages you chose when setting up this A/B test.
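
Because only openers see the content, you can estimate the effective sample before launching. In this hypothetical sketch, the 20% open rate and the 100-opener minimum are assumptions for illustration, not statistical rules; a significance test on your actual results gives a firmer answer.

```python
import math
from typing import Tuple


def content_test_sample(recipients_per_version: int, expected_open_rate: float,
                        min_openers: int = 100) -> Tuple[int, int]:
    """Estimate how many readers a content test will actually reach.

    Returns (expected_openers, recipients_needed_per_version).
    min_openers is a hypothetical threshold, not a statistical rule.
    """
    expected_openers = round(recipients_per_version * expected_open_rate)
    # Round first to absorb floating-point noise before taking the ceiling.
    recipients_needed = math.ceil(round(min_openers / expected_open_rate, 9))
    return expected_openers, recipients_needed


# 150 recipients per version at a 20% open rate reach only about 30 readers.
print(content_test_sample(150, 0.20))
# (30, 500)
```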

Once your sample is large enough, choose the metric that will decide which version of the content wins:

- Click through rate: This is the percentage of those who clicked a particular link you placed in the email to direct them to another page.

- Fully read: This measures the percentage of recipients who read the email in its entirety. Email software can estimate this from the time between the email being opened and the recipient scrolling down the page.

- Conversion: This is how many recipients complete the goal. The goal is the main purpose of your email, such as getting recipients to visit a landing page to download a whitepaper, sign up for a webinar, or buy a product.
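
The three metrics can be computed side by side for each version. In this sketch the class and field names are hypothetical, and dividing by openers is our assumption: since only openers saw the content, it compares versions fairly, but many tools report click-through rate against delivered emails instead.

```python
from dataclasses import dataclass
from typing import Dict


@dataclass
class VariantStats:
    opened: int      # recipients who opened this version
    clicked: int     # recipients who clicked the tracked link
    fully_read: int  # recipients your email software reports as having read it all
    converted: int   # recipients who completed the goal

    def content_metrics(self) -> Dict[str, float]:
        """Each metric as a share of openers, since only openers saw the content."""
        if self.opened == 0:
            raise ValueError("no opens: the content was not tested")
        return {
            "click_through_rate": self.clicked / self.opened,
            "fully_read_rate": self.fully_read / self.opened,
            "conversion_rate": self.converted / self.opened,
        }


variant_a = VariantStats(opened=200, clicked=30, fully_read=90, converted=12)
print(variant_a.content_metrics())
# {'click_through_rate': 0.15, 'fully_read_rate': 0.45, 'conversion_rate': 0.06}
```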

Here is an example of A/B testing two different versions of the content:

We recommend changing only one element, either the image or the text. That makes it easy to know exactly which change caused the difference in results, and over time you’ll learn what your subscribers respond to, which helps you make better decisions in future campaigns. Other elements you can change in the body include the location of your CTAs, social share buttons, logo, footer size, colors and so on.
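
A quick way to enforce the one-change rule is to describe each version as a set of elements and compare them before sending. The element names and file names below are hypothetical placeholders, not fields from any particular email tool.

```python
from typing import Dict, List


def changed_elements(version_a: Dict[str, str], version_b: Dict[str, str]) -> List[str]:
    """Return the names of the elements that differ between two email versions."""
    names = sorted(set(version_a) | set(version_b))
    return [name for name in names if version_a.get(name) != version_b.get(name)]


version_a = {"image": "hero-blue.png", "body_text": "Original copy", "cta_text": "Read the guide"}
version_b = dict(version_a, image="hero-team.png")  # only the image changes

changes = changed_elements(version_a, version_b)
print(changes)  # ['image']
if len(changes) != 1:
    print("Warning: more than one element changed, so the result will be hard to attribute.")
```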

5. Gather data on the winning variation

You’ve A/B tested your content or subject line and determined that A was more popular than B. Now what? The most important part of A/B testing is actually what comes next: analyzing the results to learn how to better target and communicate with your subscribers.

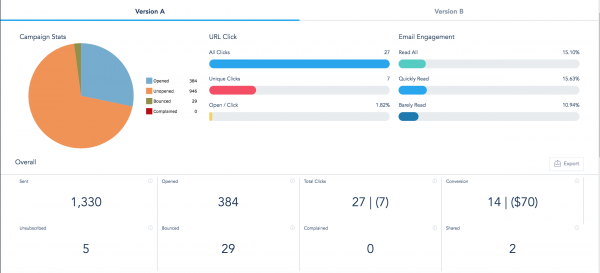

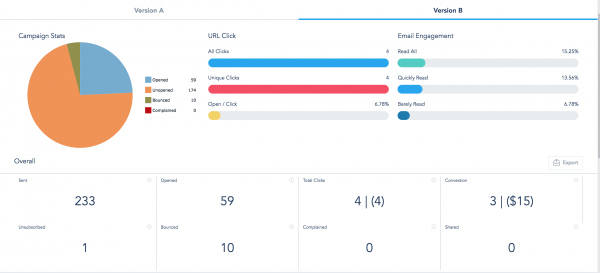

Once the test period you set ends, the winning email is delivered to the rest of your subscriber list. The stats from our test, shown in the next image, reveal that subject line A, the winning version, was sent to 1,330 subscribers, while version B went out to 233 subscribers.

Now it’s time to determine WHY version A won over version B. Sometimes the reasons aren’t obvious, and the results may even contradict what you expected. A number of different factors could be at play. Let’s look at the two subject lines again and compare them.

Subject line A: Top 10 things to consider when choosing marketing technology.

Subject line B: Find out how to choose the right marketing technology.

Can you guess why subject line A won? You can research online for statistics on what makes a subject line, social media post, title or other headline more eye-catching than others. Such research commonly finds that people like lists, and "top 10 things" grabs more attention than "find out how." Combining research like this with your own A/B test results should help you refine your content over time and achieve progressively higher engagement.

From this analysis, you could try subject lines for future emails like these examples:

- 7 email marketing practices to use today

- 3 ways marketing automation can boost your sales

- 10 digital marketing trends for 2016

You can also dive deeper into the analytics to see how many subscribers opened the email, who opened it, and how many converted. In VBOUT, conversions are measured in dollars, using a value you assign to each conversion. For example, if you assign $5 per conversion and get 17 conversions, your total is $85. You can also check how many subscribers bounced, complained or shared your email on their social channels.
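
VBOUT calculates conversion value for you. To show the arithmetic, here is a small Python sketch with a hypothetical function name; variation A’s 17 conversions match the example above, while variation B’s 9 conversions are hypothetical.

```python
from typing import Dict


def conversion_values(conversions: Dict[str, int],
                      value_per_conversion: float) -> Dict[str, float]:
    """Total value per variation: number of conversions times the assigned value."""
    return {variant: count * value_per_conversion
            for variant, count in conversions.items()}


values = conversion_values({"A": 17, "B": 9}, value_per_conversion=5)
print(values)  # {'A': 85, 'B': 45}
```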

Analytics of email variation A

Analytics of email variation B

By following the steps and advice above, you’ll be well on your way to improving your email campaign results with A/B testing! The most important aspects are defining the element to test, splitting your list into large enough test groups, choosing your KPIs, and analyzing the results. If you have other great tips or ways you’ve used A/B testing to enhance your marketing campaigns, let us know! We’d love to hear how it’s working for you.
